Comprehensive Guide to PBR Ground Surface Textures for Realistic 3D Environments

Capturing ground surface textures with high fidelity is a foundational step in producing physically based rendering (PBR) materials that convincingly replicate the complexity of natural environments. Photogrammetry and 3D scanning represent two of the most advanced methods for acquiring detailed and accurate texture data for ground materials such as dirt, sand, gravel, and mixed substrates. These techniques enable the extraction of not only high-resolution albedo maps but also essential supporting channels—roughness, normal, ambient occlusion (AO), height, and occasionally metallic—thereby facilitating authentic responses to lighting and shading within real-time engines like Unreal Engine or offline renderers like Blender’s Cycles. Achieving optimal results demands careful attention to equipment selection, capture environment, and post-processing workflows to ensure minimal distortion, proper calibration, and effective tiling and micro-variation incorporation.

Photogrammetry’s strength lies in its ability to generate dense, detailed texture maps and geometry by analyzing multiple overlapping photographs taken from different angles around a subject area. For ground surfaces, this process begins with selecting suitable capture hardware. High-resolution digital cameras with fixed focal lengths and minimal distortion lenses are preferred, as lens distortion directly impacts the accuracy of texture alignment and subsequent map extraction. Prime lenses in the 35-50mm range (full-frame equivalent) balance field of view and detail capture while reducing barrel or pincushion effects. In situations where budget or portability is a concern, modern smartphones with high-megapixel sensors and RAW capture capabilities can suffice, although careful calibration and lens distortion correction become more critical.

Lighting conditions during capture significantly influence the quality of the acquired data. For ground surfaces, diffuse, even lighting is paramount to avoid harsh shadows or specular highlights that obscure surface detail and complicate albedo extraction. Overcast skies provide ideal natural lighting, offering soft and uniform illumination. When shooting under direct sunlight, the use of portable diffusers or reflectors can mitigate shadow contrast. Consistency in lighting between shots is essential to ensure accurate color reproduction and consistent roughness interpretation. Additionally, avoiding wet or reflective ground conditions helps maintain the integrity of diffuse surface properties, as moisture can alter reflectance characteristics and cause specular anomalies.

The physical setup for photogrammetry of ground surfaces must ensure comprehensive coverage and overlap. Capturing images in a grid or circular pattern with approximately 60-80% overlap between adjacent shots enhances the software’s ability to reconstruct the geometry and texture with high precision. For low-relief surfaces like dirt or sand, incorporating oblique angles can help capture micro-variations and subtle height differences. Camera stabilization (tripods, sliders, or rigs) improves image sharpness and consistency, especially when narrow apertures (high f-numbers) are used to maximize depth of field, since these call for slower shutter speeds. Additionally, placing calibration targets or coded markers within the scene can assist software in aligning images and scaling the reconstructed model accurately, which directly benefits the fidelity of extracted texture maps.
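The overlap guideline translates into a concrete spacing between shots. The following is a minimal sketch, assuming a camera pointing straight down at flat ground and a simple pinhole model; the function names and the example numbers (a 36 mm wide sensor, a 35 mm lens, a 1.5 m camera height) are illustrative assumptions rather than values from a specific capture.

```python
import math


def ground_footprint(distance_m, sensor_mm, focal_mm):
    """Ground length covered along one sensor axis (pinhole model, camera facing down)."""
    return distance_m * sensor_mm / focal_mm


def shot_spacing(distance_m, sensor_mm, focal_mm, overlap):
    """Distance to move between adjacent shots to keep the requested overlap (0..1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return ground_footprint(distance_m, sensor_mm, focal_mm) * (1.0 - overlap)


def shots_needed(area_length_m, distance_m, sensor_mm, focal_mm, overlap):
    """Number of shots along one axis needed to cover area_length_m."""
    footprint = ground_footprint(distance_m, sensor_mm, focal_mm)
    if area_length_m <= footprint:
        return 1
    spacing = shot_spacing(distance_m, sensor_mm, focal_mm, overlap)
    return 1 + math.ceil((area_length_m - footprint) / spacing)


# Hypothetical capture: 4 m strip, camera 1.5 m up, 36 mm sensor width, 35 mm lens, 70% overlap.
spacing = shot_spacing(1.5, 36.0, 35.0, 0.70)
count = shots_needed(4.0, 1.5, 36.0, 35.0, 0.70)
```

Running the same calculation for both sensor axes gives the row and column counts of a capture grid. Oblique shots would be added on top of this nadir coverage.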

Once image acquisition is complete, photogrammetry software processes the images to generate a dense point cloud, mesh, and texture atlas. The mesh’s resolution should be sufficiently high to capture micro-variations in the surface; however, overly dense meshes can complicate downstream workflows and increase computational overhead. Retopologizing or decimating the mesh while preserving critical detail is often necessary. The texture atlas output from photogrammetry is a color texture projected from the source photographs, which becomes the albedo once captured lighting is removed; normal and ambient occlusion maps are typically baked from the high-resolution mesh onto the decimated one. Roughness and height require further authoring or extraction. Combining the baked normal and ambient occlusion maps with the mesh’s curvature and height data allows roughness maps to be generated through procedural or AI-assisted workflows. For example, roughness can be inferred by analyzing micro-geometry detail and surface wear patterns captured in the photogrammetric data. Height maps extracted from the mesh geometry enable displacement or parallax effects, essential for realism in close-up views.

3D scanning offers an alternative or complementary approach to photogrammetry, particularly when capturing ground surfaces with complex micro-geometry or loose particulate matter such as gravel or sand. Structured light scanners and laser scanners provide direct depth measurements, resulting in highly accurate geometry reconstructions. The choice between these technologies depends on the scale and detail requirements. Structured light scanners deliver high-resolution scans over smaller areas with precision, while laser scanners cover larger areas but may sacrifice some fine detail. For granular materials, scanning challenges include movement of particles during capture and the scanner’s sensitivity to surface reflectance properties. Employing matte sprays or powders can improve scan quality by reducing specular reflections and enhancing surface uniformity, though care must be taken to avoid altering the intrinsic color or texture characteristics.

Lighting remains critical during scanning as well, especially for optical-based scanners relying on surface reflectance cues. Controlled, diffuse illumination minimizes shadows and ensures consistent data capture. Calibration protocols specific to the scanner model must be strictly followed to maintain spatial accuracy and color fidelity. Post-scan data processing involves cleaning point clouds, filling occlusions, and aligning scans if multiple passes are required. Generating texture maps from scan data often involves projecting high-resolution photographs onto the reconstructed mesh, similar to photogrammetry workflows, to produce accurate albedo and normal maps.

Integration of photogrammetry and scanning data into PBR workflows requires thoughtful optimization to make the textures usable in real-time engines such as Unreal Engine or authoring platforms like Blender. Ground surfaces often demand seamless tiling textures to cover expansive areas without visible repetition. Achieving effective tiling involves identifying and isolating texture patches with minimal unique features that can be blended without obvious seams. Techniques such as edge padding, texture synthesis, and careful UV unwrapping mitigate tiling artifacts. Incorporating micro-variation layers—small-scale noise or detail maps—breaks uniformity and adds realism, especially in large ground planes.

Calibration of texture values ensures physical accuracy within PBR workflows. Albedo maps must reflect true diffuse color, avoiding baked lighting or shadows, while roughness maps accurately represent material microfacet distributions influencing light scattering. Normal maps require orientation and intensity adjustments to match engine conventions and lighting setups. Ambient occlusion maps should be blended appropriately with engine global illumination to avoid double-darkening effects. Height maps serve a dual role: enhancing normal detail through parallax occlusion mapping and enabling geometric displacement where supported. Metallic maps are rarely applicable for natural ground surfaces but may be relevant for mixed environments containing metallic debris or man-made materials.

Optimization is crucial to balance visual fidelity against performance constraints. Texture resolution should be commensurate with the intended viewing distance and engine capabilities; excessively high-resolution textures inflate memory usage without perceptible quality gains. Mipmapping and texture compression settings must preserve detail while minimizing artifacts. In engines like Unreal, custom shader setups can leverage the full suite of PBR maps to simulate complex ground surfaces with layered materials, blending dirt, gravel, and sand dynamically based on slope, moisture, or other environmental parameters. Blender’s node-based material system enables procedural augmentation of scanned textures, allowing artists to tweak roughness or height parameters to fit scene lighting precisely.
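The memory cost of a resolution choice can be estimated before any texture is authored. The sketch below sums the storage of a texture and its mip chain; the function name is an assumption, and the bit rates used in the example (32 bits per pixel for uncompressed RGBA8, 8 bits per pixel for a 4x4 block format such as BC7) are the only format details it relies on.

```python
def mip_chain_bytes(width, height, bits_per_pixel, block=1, mips=True):
    """Storage of a texture and, optionally, its full mip chain.

    block=1 models uncompressed data; block=4 models 4x4 block-compressed
    formats, where every mip level is rounded up to whole blocks.
    """
    bytes_per_block = bits_per_pixel * block * block // 8
    total = 0
    w, h = width, height
    while True:
        blocks_x = -(-w // block)  # ceiling division
        blocks_y = -(-h // block)
        total += blocks_x * blocks_y * bytes_per_block
        if not mips or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total


MIB = 1024 * 1024
uncompressed_4k = mip_chain_bytes(4096, 4096, 32) / MIB          # RGBA8 with mips
compressed_4k = mip_chain_bytes(4096, 4096, 8, block=4) / MIB    # 8 bpp block format with mips
compressed_2k = mip_chain_bytes(2048, 2048, 8, block=4) / MIB
```

Halving the resolution cuts the cost to roughly a quarter, which is why matching resolution to viewing distance matters more than most other settings.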

In summary, the acquisition of ground surface textures using photogrammetry and scanning demands a rigorous combination of high-quality equipment, controlled lighting, and meticulous capture protocols to secure accurate, distortion-free data. Subsequent processing tailors this data into comprehensive PBR maps, optimized for tiling and engine integration, ensuring that the resulting materials authentically replicate the nuanced interplay of light and surface microstructure fundamental to believable ground surfaces. Mastery of these techniques empowers artists and technical directors to create immersive environments with ground textures that respond convincingly under varied lighting and viewing conditions.

Creating convincing ground surface textures in physically based rendering (PBR) workflows requires a careful balance between artistic flexibility and physical plausibility. This balance is especially crucial when combining organic elements such as grass, moss, and leaf litter with inorganic substrates like cracked earth, compacted soil, and rocky terrain. Achieving this blend through procedural generation and photographic authoring techniques enables artists to produce highly customizable materials that maintain consistency within physically accurate shading models.

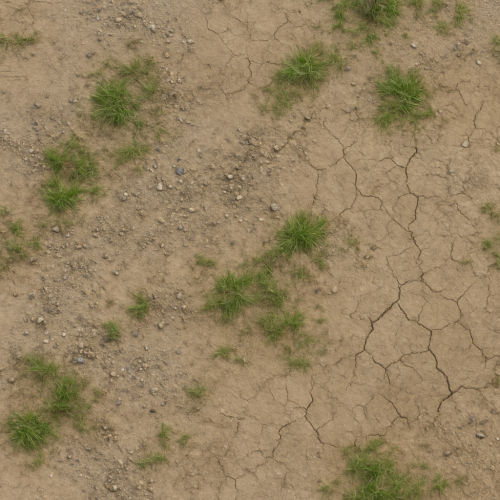

Procedural generation techniques offer strong advantages for ground surface materials due to their inherent scalability and parametric control. When authored procedurally, base color (albedo), roughness, normal, ambient occlusion (AO), and height maps can be generated algorithmically, allowing seamless tiling and infinite variation without obvious repetition. For example, noise functions such as Perlin or Simplex noise can be layered and modulated to simulate the granular, uneven distribution of soil particles, cracks, and small gravel. These noise-based masks subsequently drive roughness and height variations, ensuring that the microsurface response aligns with the underlying geometry of the terrain.
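Layered noise of this kind can be sketched in a few lines. The example below uses value noise on a wrapping lattice rather than Perlin or Simplex noise, purely to keep the code short; the layering (fractal Brownian motion) works the same way with either. All function names and default parameters are assumptions, and a production pipeline would normally use array libraries or a node-based tool instead of nested lists.

```python
import math
import random


def make_lattice(seed=0, size=8):
    """Random values on a size x size grid; indices wrap, so the noise is periodic."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def smooth(t):
    return t * t * (3.0 - 2.0 * t)


def value_noise(lattice, x, y):
    """Smoothly interpolated lattice noise in 0..1, periodic with the lattice size."""
    n = len(lattice)
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = smooth(x - x0), smooth(y - y0)

    def at(ix, iy):
        return lattice[iy % n][ix % n]

    top = at(x0, y0) + (at(x0 + 1, y0) - at(x0, y0)) * tx
    bottom = at(x0, y0 + 1) + (at(x0 + 1, y0 + 1) - at(x0, y0 + 1)) * tx
    return top + (bottom - top) * ty


def fbm(lattice, x, y, octaves=4, lacunarity=2, gain=0.5):
    """Sum octaves of noise; an integer lacunarity keeps the result periodic."""
    total, norm, amplitude, frequency = 0.0, 0.0, 1.0, 1
    for _ in range(octaves):
        total += amplitude * value_noise(lattice, x * frequency, y * frequency)
        norm += amplitude
        amplitude *= gain
        frequency *= lacunarity
    return total / norm


def height_map(size_px=64, cells=8, seed=0):
    """Tiling height grid: the texture spans exactly one lattice period."""
    lattice = make_lattice(seed, cells)
    scale = cells / size_px
    return [[fbm(lattice, x * scale, y * scale) for x in range(size_px)]
            for y in range(size_px)]
```

The same grid can be remapped to drive roughness, for instance by making raised grains slightly rougher than the compacted soil between them.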

A common procedural approach involves first defining the macro structure of the terrain—large cracks, ridges, and depressions—using fractal noise combined with erosion simulation algorithms. These forms provide the base height map, which is then converted into normal maps to enhance detail without increasing polygon count. The height and normal maps must be carefully calibrated to match the scale of the intended surface, as excessive bumpiness or unrealistic depth can break immersion when viewed up close or under dynamic lighting. Calibration is often performed by comparing procedural outputs against high-resolution photographic references, ensuring that the amplitude of height and normal variations align with real-world ground surfaces.
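The height-to-normal conversion can be illustrated with central differences. This is a sketch under stated assumptions: the height grid tiles (so neighbors wrap around), `strength` is a hypothetical scale factor standing in for the calibration step described above, and the sign of the green channel may need flipping depending on whether the target engine expects OpenGL-style or DirectX-style normal maps.

```python
import math


def height_to_normal(height, strength=1.0):
    """Convert a tiling height grid (rows of floats) to normals encoded in 0..1."""
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences with wrap-around, so the output tiles like the input.
            dx = (height[y][(x + 1) % cols] - height[y][(x - 1) % cols]) * 0.5
            dy = (height[(y + 1) % rows][x] - height[(y - 1) % rows][x]) * 0.5
            # The normal leans away from the uphill direction.
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Remap each component from -1..1 to 0..1 for storage in a texture.
            row.append(tuple(0.5 * (c / length) + 0.5 for c in (nx, ny, nz)))
        normals.append(row)
    return normals
```

Raising `strength` steepens the encoded slopes, which is the parameter to tune when comparing the result against photographic reference.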

Once the inorganic base is established, organic elements such as grass patches and moss need to be integrated without sacrificing physical correctness. Procedural authoring excels here by enabling the generation of masks that control spatial distribution and blending between materials. For instance, low-frequency noise combined with slope and curvature maps can simulate areas where moss preferentially grows—usually on shaded, moisture-retentive surfaces such as depressions or rock faces. Similarly, grass patches can be driven by slope gradients and random seed patterns to avoid unnatural uniformity.
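A distribution mask of this kind reduces to a product of thresholded inputs. The sketch below is illustrative only: the function names, the 0..1 `cavity` input (for example an inverted AO or concavity value), and every threshold are assumptions that would be tuned against reference photographs.

```python
def smoothstep(edge0, edge1, x):
    """Hermite ramp from 0 at edge0 to 1 at edge1."""
    t = min(1.0, max(0.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def moss_mask(cavity, noise):
    """Moss favors recessed, moisture-retaining texels; noise breaks up the shape."""
    moisture = smoothstep(0.3, 0.7, cavity)
    breakup = smoothstep(0.35, 0.65, noise)
    return moisture * breakup


def grass_mask(slope_deg, noise, max_slope_deg=35.0, fade_deg=10.0):
    """Grass fades out as the slope approaches max_slope_deg; noise adds patchiness."""
    slope_term = 1.0 - smoothstep(max_slope_deg - fade_deg, max_slope_deg, slope_deg)
    patches = smoothstep(0.4, 0.6, noise)
    return slope_term * patches
```

Evaluating these per texel, with low-frequency noise for `noise`, yields masks that can feed the layer blending described later.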

To maintain PBR consistency, each material layer must possess complete texture sets. Grass patches, for instance, require albedo maps that capture the subtle color variation of blades and underlying dirt, roughness maps that reflect the microfacet distribution of leaf surfaces, and normal maps that encode the fine structural detail of grass blades. Height maps can be used both for parallax occlusion effects and to generate accurate AO maps that simulate self-shadowing within dense foliage. Importantly, these organic elements rarely require metallic maps, as their surfaces are predominantly dielectric; however, when authoring moss or wet soil, adjustments to roughness and specular intensity are necessary to replicate the dampened reflectivity characteristic of moisture.

Photographic authoring complements procedural techniques by providing realistic base data that can be refined and extended. High-resolution photo captures of ground surfaces—taken under controlled lighting conditions with diffuse illumination to minimize shadows—form the backbone of many PBR texture libraries. These photos are processed to extract albedo, roughness, normal, and height data using specialized software such as Substance Designer, Quixel Mixer, or custom pipelines in Blender. Multi-angle photogrammetry and photometric stereo can enhance normal and height detail by capturing the surface geometry more accurately.

One challenge in photographic authoring is ensuring seamless tiling, as ground surfaces are inherently irregular. To address this, photos are often subjected to edge blending algorithms and frequency separation techniques. Frequency separation allows artists to isolate low-frequency color information from high-frequency detail, enabling independent manipulation of color variation and surface microstructure. Seamless tiling can then be achieved by mirroring, offsetting, and blending edges without introducing visible seams or unnatural repetitions.
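The offset-and-blend idea can be sketched on a single grayscale channel. This is a deliberately crude version, assuming a grid at least two texels wide and tall: each axis is cross-faded with a copy of the image shifted by half its size, so the borders end up showing content that was originally adjacent in the interior. Dedicated tools use texture synthesis or manual cloning instead, and the function names here are hypothetical.

```python
def _edge_weight(i, n):
    """0 at both borders, rising linearly toward 1 at the center."""
    return 1.0 - abs(2.0 * i / (n - 1) - 1.0)


def _blend_horizontal(img):
    """Make the left/right border seamless by cross-fading with a half-offset copy."""
    cols = len(img[0])
    half = cols // 2
    out = []
    for row in img:
        new_row = []
        for x in range(cols):
            w = _edge_weight(x, cols)
            new_row.append(w * row[x] + (1.0 - w) * row[(x + half) % cols])
        out.append(new_row)
    return out


def _transpose(img):
    return [list(col) for col in zip(*img)]


def make_tileable(img):
    """Apply the cross-fade along x, then along y."""
    horizontal = _blend_horizontal(img)
    return _transpose(_blend_horizontal(_transpose(horizontal)))
```

The cross-fade softens contrast where the two copies mix, so it tends to work best on the low-frequency layer from frequency separation, with the high-frequency detail handled separately.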

Micro-variation is critical to avoid the “texture tiling” artifact common in large terrain applications. For photographic textures, this can be enhanced by layering subtle procedural noise or additional detail maps over the base photo-derived textures. These overlays introduce fine-grained roughness and albedo variation that break up uniformity, especially when viewed at close range. In procedural workflows, micro-variation is inherent due to stochastic noise inputs, but it remains essential to tune noise parameters and layering to prevent over-patterning and ensure natural randomness.

Blending organic and inorganic layers in photographic workflows is often achieved through mask-based layering within material authoring tools. Masks derived from procedural noise, vertex paint, or hand-painted textures control the spatial influence of grass, moss, or leaf litter over the inorganic base. The maps from each layer are combined using physically plausible blending operations, typically linear interpolation for albedo and smooth min/max functions for roughness and height, with blended normals renormalized to unit length, to preserve consistent light interaction. Ambient occlusion maps are either baked separately per layer or left to the engine’s dynamic occlusion, which saves texture memory at some runtime cost.
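A height-weighted blend is one common way to make a mask respect the surface relief, so that, for example, moss fills gaps between stones before it covers their tops. The sketch below is one widely used formulation rather than the method of any particular tool; the layer dictionaries, the `depth` parameter, and the function names are assumptions.

```python
def lerp(a, b, t):
    return a + (b - a) * t


def height_blend_weight(height_a, height_b, mask, depth=0.2):
    """Weight of layer B in 0..1; mask is B's coverage, depth (> 0) sets edge softness."""
    a = height_a + (1.0 - mask)
    b = height_b + mask
    threshold = max(a, b) - depth
    wa = max(a - threshold, 0.0)
    wb = max(b - threshold, 0.0)
    return wb / (wa + wb)


def blend_layers(layer_a, layer_b, mask, depth=0.2):
    """Blend one texel of two layers, each a dict with albedo, roughness, height."""
    w = height_blend_weight(layer_a["height"], layer_b["height"], mask, depth)
    return {
        "albedo": tuple(lerp(ca, cb, w)
                        for ca, cb in zip(layer_a["albedo"], layer_b["albedo"])),
        "roughness": lerp(layer_a["roughness"], layer_b["roughness"], w),
        "height": lerp(layer_a["height"], layer_b["height"], w),
    }


soil = {"albedo": (0.30, 0.22, 0.15), "roughness": 0.9, "height": 0.2}
moss = {"albedo": (0.12, 0.25, 0.08), "roughness": 0.7, "height": 0.8}
texel = blend_layers(soil, moss, mask=0.5)
```

With the mask at its midpoint the taller layer wins outright when the height difference exceeds `depth`, which gives the crisp, relief-following transitions that a plain linear blend lacks.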

In both procedural and photographic authoring, optimization for real-time use, whether in Unreal Engine or in Blender’s real-time Eevee renderer, is a key consideration. Texture resolution must be balanced against memory budgets, particularly for large terrain systems. Texture arrays and virtual texturing allow many high-detail textures to be sampled and streamed efficiently, which makes it practical to use enough variants to mitigate repetition. Compression artifacts must be minimized, since ground surfaces often cover large screen areas and any loss in detail or color fidelity is readily apparent. Packing several grayscale maps into the channels of a single texture, such as ambient occlusion alongside roughness, reduces the number of texture samples without compromising visual quality.

Within Unreal Engine, ground surface materials benefit from the engine’s layered material system and node-based material editor. Height maps can drive both height-based layer blending and tessellation or displacement for enhanced geometric detail, depending on what the engine version supports, while runtime virtual texturing caches the blended result of several material layers so that the full layer stack does not have to be evaluated for every pixel in every frame. Height and normal maps authored procedurally or photo-scanned can be imported as tiled textures or virtual textures, with parameter-driven masks controlling the distribution of organic elements. Unreal’s physically based shading model demands precise calibration of roughness and specular parameters, especially when wetness or moisture effects are simulated through dynamic material parameters.

Blender’s shader nodes offer a flexible environment for combining procedural noise with photo-derived textures. Artists can construct node groups that generate masks from curvature, slope, and noise inputs to blend grass and moss layers atop soil or rock bases. Normal and height maps are combined using normal map blending nodes or bump node chains to preserve surface detail. Blender’s viewport rendering supports PBR previews with accurate roughness and specular response, aiding iterative calibration before exporting textures or materials to engines.

In summary, the procedural and photographic authoring of ground surface PBR textures involves an interplay of algorithmic generation and real-world data acquisition. Procedural methods provide infinite variation, precise control, and seamless tiling essential for large terrains, while photographic techniques offer unmatched realism and detail fidelity. By blending these approaches and carefully calibrating texture parameters—ensuring accurate albedo, roughness, normal, AO, and height information—artists can create physically accurate, visually rich ground materials that convincingly portray the complex interface of organic and inorganic elements present in natural environments. Optimization strategies and engine-specific workflows further refine these materials for real-time use, maintaining fidelity without sacrificing performance.

The creation and calibration of physically based rendering (PBR) maps for ground surfaces demand a rigorous approach to accurately represent the complex interplay of materials, lighting, and environmental conditions. Ground textures—whether depicting natural terrain like soil, gravel, or grass, or constructed urban surfaces such as asphalt, concrete, or cobblestones—exhibit a diverse range of physical properties that must be captured through a cohesive set of texture maps. The essential PBR maps include albedo (or base color), normal, roughness, metallic, ambient occlusion (AO), and, often, height or displacement maps. Each map plays a distinct role in defining how light interacts with the surface, but their combined calibration is critical to achieving realism, especially when simulating varying moisture levels and weathering states.

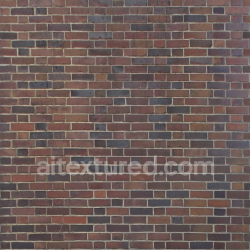

The albedo map is foundational, representing the diffuse reflectance of the surface without shadows or lighting artifacts. For ground surfaces, this map must be carefully acquired or authored to avoid baked-in shadows or specular highlights, as these will interfere with the physically based light calculations within the rendering engine. Photogrammetry or high-resolution photo scans are common sources for albedo data, but raw textures often require de-lighting (removing captured shadows and shading gradients) together with white balance and exposure correction to neutralize the lighting conditions present during acquisition. When authoring albedo from scratch, it is vital to maintain a physically plausible color palette that corresponds to the real-world material identity. For example, soil typically exhibits a warm, earthy tone with subtle chromatic variation, while concrete tends toward a cooler, desaturated gray. The albedo should also reflect the effects of moisture changes: wet ground appears darker and often more saturated, which can be captured either by separate wet-state albedo maps or through shader blending techniques.

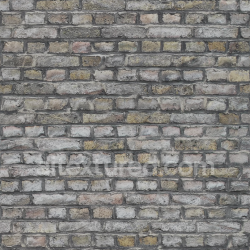

Normal maps encode surface microgeometry, enhancing small-scale lighting variations that cannot be captured by the base mesh alone. For ground surfaces, these details include rough granularities such as pebbles, cracks, and soil clumps or the fine texture of asphalt and concrete aggregates. Creating accurate normal maps often involves baking from high-poly sculpts or generating from height maps. It is crucial to maintain consistent tangent space orientation across the texture set to prevent lighting artifacts. When authoring normal maps, attention must be paid to the balance between micro-variation and tiling; overly repetitive patterns break immersion, so introducing randomized micro-details through overlay layers or blending multiple normal maps is standard practice. In addition, calibration of normal map intensity is essential: too strong, and the surface appears unnaturally bumpy; too weak, and the surface loses dimensionality. Many engines allow for parameter adjustment, but starting with physically realistic normal intensity values based on real-world measurements—such as the average roughness scale of soil or pavement—is advisable.

Roughness maps control the microsurface scattering behavior, dictating how glossy or matte the surface appears. Ground materials exhibit a wide range of roughness values that vary with environmental factors. Dry soil or concrete typically presents high roughness values, diffusing reflections broadly, whereas wet or polished surfaces produce lower roughness, creating sharper specular highlights. Authoring roughness maps requires an understanding of the interplay between surface material and moisture levels. Reference data or real-world measurements can guide the calibration of roughness values. For example, in urban environments, surfaces like asphalt can transition from a dry, highly rough state (~0.8–0.9 roughness) to a wet, glossier state (~0.3–0.5 roughness). This variation is often implemented through layered textures or shader parameters that blend between roughness maps based on environmental inputs such as rain simulation or puddle coverage. When generating roughness maps from photogrammetry, careful desaturation and inversion of glossiness data are necessary to isolate physically accurate microfacet scattering properties, avoiding artifacts from specular highlights baked into source images.

The metallic map is generally less critical for most ground surfaces, as natural terrains and many urban pavements are predominantly non-metallic. However, some urban ground materials—such as manhole covers, metal grates, or embedded railway tracks—require metallic maps to correctly simulate their reflectance properties. In these cases, metallic maps are binary or near-binary, distinguishing metal from non-metal regions. Since metallic behavior radically changes the surface’s reflectance model—from diffuse-albedo-based to specular-dominated—accurate masking is essential to prevent rendering inconsistencies. Ground surface PBR workflows often leave metallic maps empty or set to zero, but it is important to include them in the texture set for completeness and future extensibility.

Ambient occlusion (AO) maps simulate the attenuation of ambient light in crevices and occluded areas, enhancing depth perception and detail. For ground surfaces, AO maps accentuate cracks, depressions between stones, and the subtle topology of uneven soil or gravel. AO is generally baked from high-poly geometry or generated procedurally, then applied in the shader to the ambient (indirect) lighting term; multiplying it into the base color is best avoided, because that bakes shading into the albedo and darkens directly lit areas as well. Calibration of AO intensity is a balancing act: too strong, and the texture appears unnaturally dark and muddy; too weak, and the surface lacks spatial definition. Additionally, AO maps should be carefully tiled and blended with micro-variation to avoid obvious repetition, especially in large terrain textures. For dynamic weathering states, AO maps can be modulated or blended with wetness effects, as moisture often fills occluded areas, reducing ambient shadowing and altering visual contrast.

Height or displacement maps provide geometric detail by encoding surface elevation differences, facilitating parallax occlusion mapping (POM) or tessellation in modern rendering engines like Unreal Engine or Blender’s Cycles/Eevee. For ground surfaces, height maps enhance realism by defining features such as potholes, ruts, and gravel piles. Accurate height maps are usually derived from photogrammetry or sculpted manually to emphasize directional surface features. Calibration involves normalizing height values to ensure consistent depth scaling relative to the model and aligning them with normal maps to avoid shading discrepancies. Excessive height exaggeration can cause unnatural silhouettes or self-shadowing, so testing in the target engine’s shader pipeline is necessary to strike the right balance between visual impact and performance.
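The normalization step can be sketched as follows, assuming heights arrive as real-world elevations in meters (for example sampled from a scanned mesh). The function name is hypothetical. Returning the physical span alongside the normalized grid keeps the information needed to set the displacement scale in the target engine.

```python
def normalize_height(height_m):
    """Map elevations to 0..1 and return (normalized_grid, span_in_meters)."""
    lowest = min(min(row) for row in height_m)
    highest = max(max(row) for row in height_m)
    span = highest - lowest
    if span == 0.0:
        # A perfectly flat input carries no relief; use mid-gray and a zero scale.
        return [[0.5 for _ in row] for row in height_m], 0.0
    normalized = [[(v - lowest) / span for v in row] for row in height_m]
    return normalized, span


# Hypothetical patch of scanned ground with 4 cm of relief.
grid, span_m = normalize_height([[0.10, 0.14], [0.12, 0.10]])
```

Using `span_m` as the displacement or parallax depth keeps the rendered relief at its measured scale; exaggeration then becomes an explicit artistic multiplier rather than an accident of normalization.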

Tiling and micro-variation are critical considerations throughout the texture creation process for ground surfaces. Ground planes often cover large areas, requiring seamless tiling to avoid visible repetition. However, perfect tiling can induce uniformity that breaks immersion. Introducing micro-variation through detail masks, noise overlays, or multi-scale blending of texture layers mitigates this issue. For instance, layering a fine-grained gravel normal map over a broader soil normal map, or blending multiple albedo variations using procedural masks, can simulate natural heterogeneity. In engines like Unreal, material functions and parameter-driven blends help implement these variations dynamically, allowing artists to fine-tune appearance based on environmental parameters such as dirt accumulation or moisture gradients.

Calibration across different moisture and weathering states is vital for ground surface realism. Moisture alters nearly every PBR parameter: albedo darkens and saturates, roughness decreases, and AO contrast diminishes as water pools in crevices. Weathering introduces dirt, moss, cracks, and wear patterns that affect texture maps non-uniformly. Creating separate texture sets or layered masks for dry, wet, and weathered states allows for shader-driven blending, controlled by environmental simulation or manual artist input. Calibration involves iterative adjustment of roughness curves, specular intensity, and AO strength to replicate observed physical phenomena, confirmed through real-time previews and reference comparisons. For example, wet asphalt not only darkens but also exhibits sharper specular highlights due to water’s smooth surface layer, requiring recalibrated roughness and normal maps to simulate subtle water film reflections.
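These moisture effects can be collapsed into a single wetness parameter for illustration. The sketch below is an assumption-laden approximation, not a measured model: a power curve darkens the albedo and, because it widens the ratio between channels, also raises saturation; roughness is pulled toward a wet value of 0.4 (inside the wet asphalt range quoted earlier); and AO is faded partway toward 1. The exponent, the AO fill amount, and the function names are all hypothetical tuning values.

```python
def lerp(a, b, t):
    return a + (b - a) * t


def apply_wetness(albedo, roughness, ao, wetness,
                  wet_exponent=1.6, wet_roughness=0.4, ao_fill=0.5):
    """Return (albedo, roughness, ao) for one texel at a wetness of 0 (dry) to 1 (soaked)."""
    w = min(1.0, max(0.0, wetness))
    exponent = lerp(1.0, wet_exponent, w)
    # Channels are in 0..1, so an exponent above 1 darkens and increases saturation.
    wet_albedo = tuple(c ** exponent for c in albedo)
    # Never make a surface rougher by wetting it.
    wet_rough = lerp(roughness, min(roughness, wet_roughness), w)
    # Water filling crevices reduces ambient shadowing.
    wet_ao = lerp(ao, lerp(ao, 1.0, ao_fill), w)
    return wet_albedo, wet_rough, wet_ao


dry_asphalt = ((0.30, 0.25, 0.20), 0.85, 0.6)
soaked = apply_wetness(*dry_asphalt, wetness=1.0)
```

In an engine the same blend would be evaluated in the shader, with `wetness` supplied by a rain simulation or a painted puddle mask.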

Optimization is another key aspect. Ground textures often cover vast areas and must balance resolution with memory budgets and performance. Using mipmaps, channel packing (e.g., storing AO, roughness, and metallic in the R, G, and B channels of a single texture, a layout often called ORM), and procedural detail blending reduces resource consumption without sacrificing visual fidelity. In Blender’s node-based material system, a packed texture can be split back into its grayscale maps with a Separate Color (formerly Separate RGB) node, and procedural noise generators can replace some image textures altogether. Unreal Engine supports virtual textures and runtime material parameter adjustments to further optimize large ground surfaces, enabling dynamic weathering and wetness without the need for multiple heavy texture sets.
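Channel packing itself is a simple per-texel operation. The sketch below assumes AO in red, roughness in green, and metallic in blue; any fixed order works as long as the shader reads the same channels. The function names are hypothetical, and writing the result to an image file is left to whatever image library the pipeline already uses.

```python
def to_byte(value):
    """Quantize a 0..1 float to an 8-bit channel value."""
    return max(0, min(255, int(value * 255.0 + 0.5)))


def pack_orm(ao, roughness, metallic):
    """Pack three same-sized grayscale grids into one grid of (R, G, B) byte tuples."""
    rows, cols = len(ao), len(ao[0])
    for grid in (ao, roughness, metallic):
        if len(grid) != rows or any(len(row) != cols for row in grid):
            raise ValueError("all maps must share the same dimensions")
    return [
        [(to_byte(ao[y][x]), to_byte(roughness[y][x]), to_byte(metallic[y][x]))
         for x in range(cols)]
        for y in range(rows)
    ]


# One dry-soil texel: lightly occluded, very rough, non-metallic.
packed = pack_orm([[0.8]], [[0.9]], [[0.0]])
```

Packed data maps should be imported as linear (non-color) data, since gamma correction would distort the stored roughness and AO values.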

In conclusion, the creation and calibration of PBR maps for ground surfaces hinge on meticulous acquisition, authoring, and iterative adjustment of albedo, normal, roughness, metallic (where relevant), AO, and height maps. Attention to tiling, micro-variation, and environmental state transitions enhances realism, while careful calibration ensures accurate light-material interactions. Integrating these maps within modern rendering engines like Unreal Engine and Blender requires an understanding of their respective shader frameworks and optimization capabilities, enabling artists and technical directors to craft ground surfaces that convincingly respond to changing weather and lighting conditions across diverse natural and urban scenarios.